DHIS2 Resources: Learn, Discuss & Implement

Whether you are just getting started with DHIS2 or are working with an existing implementation, you can find a wide variety of helpful resources here.

Download & install DHIS2 software

Visit our DHIS2 Downloads page for links to downloadable files for DHIS2 core software, Android app, and metadata packages, as well as guidance on installing DHIS2 and helpful links to additional resources.

Find the answer with DHIS2 Documentation

Wondering how to get started with a new DHIS2 project? Or do you have a specific technical task you need to learn? On our DHIS2 Documentation site you can find comprehensive guidance for implementing, updating, managing and using DHIS2 core software, applications (including Android and Tracker), and metadata packages, as well as guides for developing your own applications within the DHIS2 platform or connecting external applications to your DHIS2 system.

The Documentation site also includes the tutorials on technical topics that were previously posted on the dhis2.org website (including how to analyze PostgreSQL logs and how to sign up for Google Earth Engine).

DHIS2 documentation is written by implementation experts and developers from the core DHIS2 software team and our global HISP network based on best practices and lessons learned from real-world use cases. Documentation is available in English and has been translated into a selection of languages. In keeping with DHIS2’s open-source philosophy, we welcome edits, additions, and translations of our documentation from all members of the DHIS2 community.

Get support for your DHIS2 project

There are various ways you can get support for your DHIS2 project, including through the DHIS2 Community of Practice and by contacting our expert HISP network. You can also report bugs and request new features, and contact the DHIS2 core team to explore potential partnerships.

Explore your server hosting options

DHIS2 is shared under an open-source license and is free for everyone to install and use. However, managing a DHIS2 instance involves setting up and hosting a web server. The choice of server is up to each organization, but we provide information and suggestions, based on our experience, to help you make an informed choice of hosting solution.

Get started with DHIS2 development

Are you interested in contributing to the development of the open-source DHIS2 software? Or are you considering developing a custom web or Android application to use with your DHIS2 instance? We have assembled a collection of useful information and resources to help you get started.

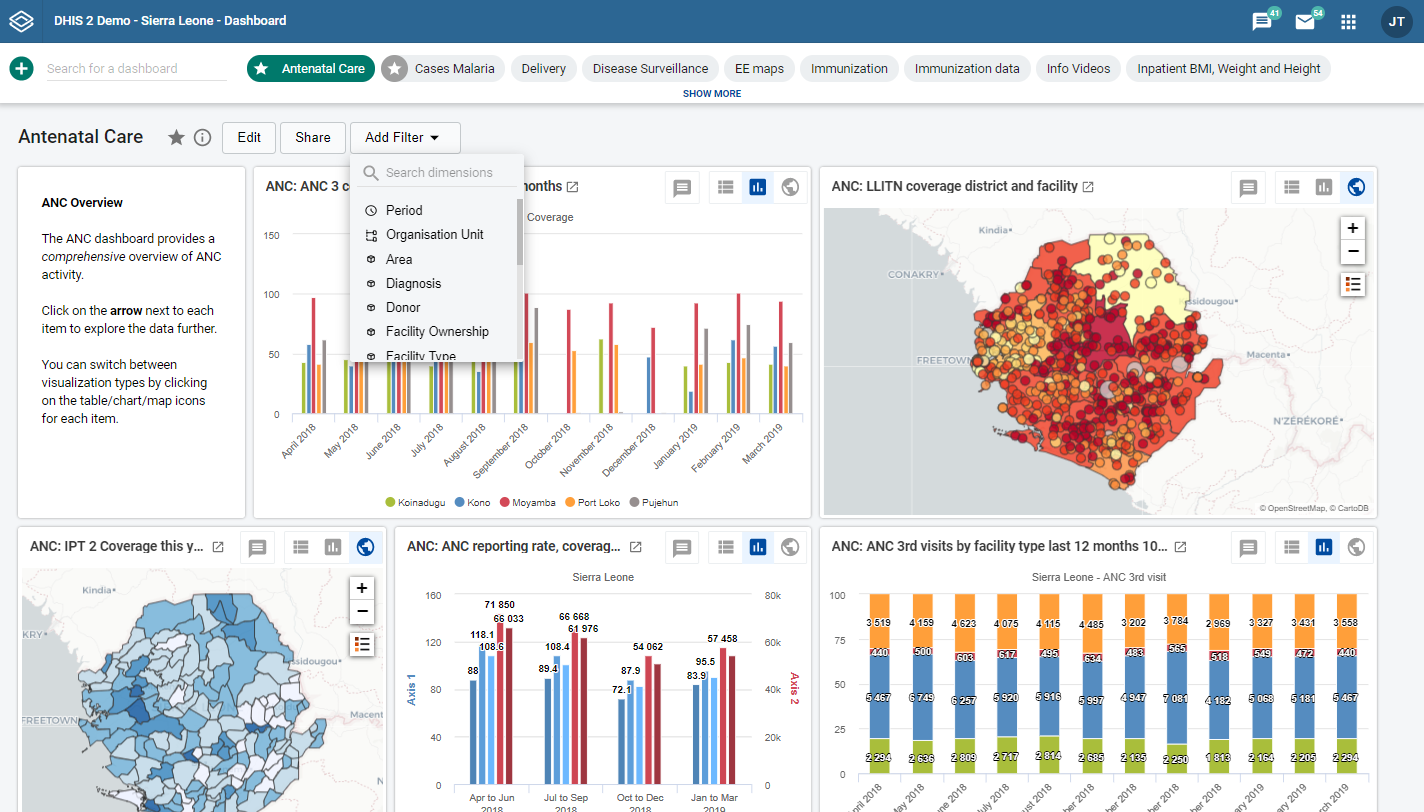

Explore DHIS2 with our interactive demos

DHIS2 provides a selection of demo configurations and databases for you to use to explore the capabilities and features of our software, including various software versions and program configurations. You can test out DHIS2 using both our web interface and Android mobile app.

Develop your skills with the DHIS2 Academy

The DHIS2 Academy program aims to strengthen national and regional capacity to successfully set up, design and maintain DHIS2 systems. Through a combination of online courses and intensive in-person training that includes both theoretical and practical sessions, you will become a DHIS2 expert ready to support your organization’s data collection, analysis, and reporting needs. This capacity-building program provides a better understanding of the available DHIS2 tools and best practices, and is regularly updated to include new concepts, program areas, and system features.

Watch informative videos on DHIS2

We have produced a wide range of video content that demonstrates DHIS2 features and use cases, as well as walkthroughs of common tasks within DHIS2 and instructional videos that review complex processes in depth. You can browse the complete collection of DHIS2 videos on our YouTube channel. To watch videos on software features from the latest DHIS2 software releases, visit our Feature Spotlight page.

See how DHIS2 makes an impact around the world

We collect and share DHIS2 impact stories from around the world to highlight how Ministries of Health and other organizations have used DHIS2 to make a positive impact in health programs, education management, and other areas. These stories often highlight local innovations and customizations that are available for the larger community, sharing knowledge and best practices to improve the use of and impact from DHIS2 worldwide.

Information systems research and DHIS2

DHIS2 is not just a software platform. It is also part of a decades-long participatory action research project on health information system strengthening led by the HISP Centre at the University of Oslo. Visit our research page to learn more about the project, and find links to key articles and publications by the HISP research team.

Additional training courses from data.org

HISP UiO is pleased to share several free, self-paced online courses designed by the data.org team. We have reviewed this training material, and believe that these courses could be valuable resources for the global DHIS2 community.

- Ethical AI in Practice: Register

- Introduction to Responsible Data Management: Register

- Data Storytelling: Health-Focused Climate Communication: Register

- Advancing in Artificial Intelligence Course (in Spanish only): Register

About data.org

data.org is accelerating the power of data and AI to solve some of the world’s biggest problems. By 2032, we intend to train a workforce of one million purpose-driven data practitioners to help improve the quality of life for people everywhere. Through leading innovation challenges that surface and scale breakthrough ideas, and by elevating the most effective tools and strategies, we are actively building the field of data for social impact. As a platform for partnerships advancing individuals and organizations on their digital transformation journey, we are democratizing access to resources such as this course for free.

You can learn more about these courses on the DHIS2 Community of Practice.